The notes below formed the launchpad for a discussion I had on the NosillaCast #1015 with Bart Busschots. In the first part of our discussion, we talked about whether Bart was disappointed in me when I used ChatGPT 4o to write some JavaScript for me to embed in TextExpander instead of writing it myself. In the second half, we discussed a research article that studied the significant reduction in activity on Stack Overflow since ChatGPT took off. The notes below aren’t a transcript, but rather our outline for the discussion. There are a few links (and images) below that might be useful to follow when listening to the discussion.

Part 1

Allison

You’ve just heard me talk about how I used ChatGPT 4o to write a JavaScript script for TextExpander to copy the URL from Transmit to a usable URL for my blog posts. On social media when I posted the article you just heard, I said, “I hope Bart isn’t disappointed with me.” His response surprised me and I thought it would be fun for you to hear it first hand. Were you disappointed in me for using AI, Bart?

Bart

This topic fascinated me because it illustrates my current thinking on AI perfectly.

My current hypothesis is that LLMs are wonderfully, brilliantly, and universally … mediocre! And that’s a good thing!

A lot of people tend to judge AI by asking it to do something they themselves are good at, and inevitably it does a worse job than they would, so they smugly dismiss AI as a silly over-hyped toy and carry on as before. But IMO, that totally and utterly misses the point!

Given how LLMs work, it’s not even the slightest bit surprising they are mediocre at things. What makes them powerful is that they are mediocre at everything! They are the most amazing generalists, so their value is not in doing the things you’re good at. You don’t need help with those, you’re good at them! Where they provide value is in the infinitely more things you’re less than mediocre at!

If you are less than mediocre at something, the chances are ChatGPT is better at it than you, so it can help!

So, if I put my silly scoffing hat on, I can dismiss this solution as stupidly inefficient and overly complicated. I mean, this is literally a one-liner:

TextExpander.appendOutput(TextExpander.pasteboardText.replace('sftp://138.68.36.215//srv/websites/podfeet/public_html/', 'https://www.podfeet.com/'))

(Yes, that works!)
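If you want to try the same replacement outside TextExpander, here is a minimal sketch of the logic as a plain function. The function name, constant names, and example file path are made up for illustration. Only the two prefixes come from the one-liner above.

```javascript
// Prefixes copied from the one-liner; the names are hypothetical.
const SFTP_PREFIX = 'sftp://138.68.36.215//srv/websites/podfeet/public_html/';
const WEB_PREFIX = 'https://www.podfeet.com/';

function sftpToWebUrl(clipboardText) {
  // replace() with a string pattern swaps only the first match,
  // which is all a prefix swap needs. Text without the prefix
  // comes back unchanged.
  return clipboardText.replace(SFTP_PREFIX, WEB_PREFIX);
}

// The file path here is an invented example.
console.log(sftpToWebUrl(SFTP_PREFIX + 'wp-content/uploads/example.png'));
// prints "https://www.podfeet.com/wp-content/uploads/example.png"
```

Inside TextExpander, the input would come from TextExpander.pasteboardText and the result would go to TextExpander.appendOutput, exactly as in the one-liner.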

But I’m a better-than-mediocre programmer, so why is it surprising that I can write better code than ChatGPT? But what percentage of humans on planet Earth are less than mediocre programmers? What percentage of humans could not get any working solution without help? Conservatively, 95%? Probably more! So, how, exactly, is it not really impressive that ChatGPT provided a working solution so easily?

Part 2

Allison

I came across a journal article titled “Large language models reduce public knowledge sharing on online Q&A platforms”. I’m not normally one for reading long-form research papers, but I found this one from the US National Academy of Sciences fascinating.

We know that the use of LLMs for programming tasks is on the rise (I can personally attest to this) and that the capabilities in this area have come from absorbing human knowledge on programming-specific help sites like Stack Overflow. At Stack Overflow you can post a programming question, and people of all skill levels will jump in and try to help you. People’s answers (and questions for that matter) get upvoted and downvoted so you can tell which answer is probably the one you should follow.

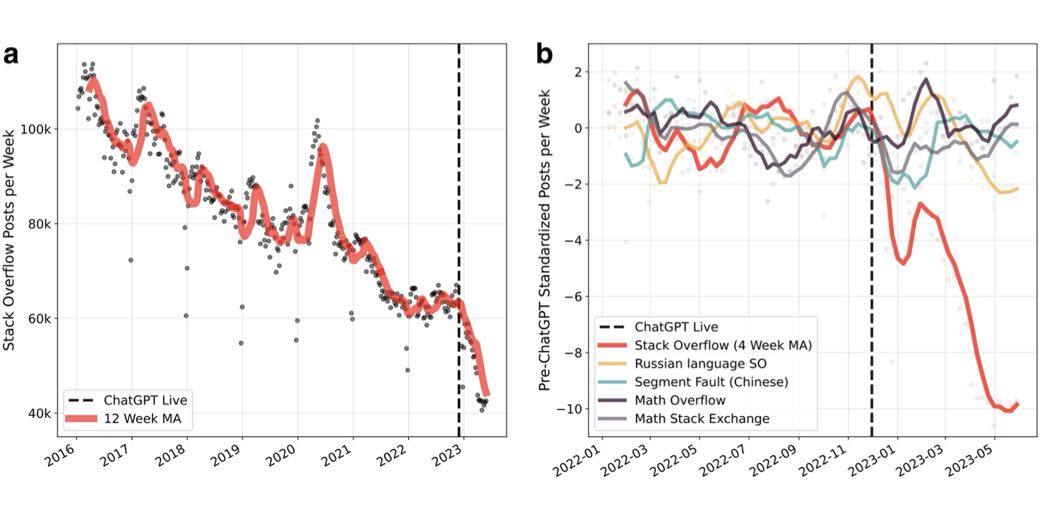

The researchers in this paper analyzed the post activity on Stack Overflow from 2016 until the middle of 2023 and then analyzed the changes seen since ChatGPT entered the scene at the end of 2022.

They found that the number of weekly posts decreased at a rate of about 7,000 posts each year from 2016 to 2022. In just the first 6 months after the release of ChatGPT, the weekly posting rate decreased by around 20,000 posts.
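To put those two figures on the same footing, here is a rough back-of-the-envelope sketch. The variable names are made up, and annualizing the six-month drop assumes that pace would hold for a full year, which the paper does not claim.

```javascript
// Figures as quoted above; variable names are hypothetical.
const preDropPerYear = 7000;       // fall in weekly posts per year, 2016 to 2022
const postDropInSixMonths = 20000; // fall in weekly posts in the 6 months after ChatGPT

// Assumption: the six-month pace continues for a whole year.
const postDropPerYear = postDropInSixMonths * 2;
const speedup = postDropPerYear / preDropPerYear;

console.log(speedup.toFixed(1)); // prints "5.7"
```

In other words, under that assumption the decline ran at nearly six times its earlier pace.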

While that is extraordinary, we all know that correlation is not causation. To cement that in your brain, search for Tyler Vigen’s Spurious Correlations graphs, where he correlates things like the number of fire inspectors in Florida against solar power generated in Belize.

To try to determine whether ChatGPT was the cause of the dramatic change in the downward slope of posts on Stack Overflow, the researchers gathered data on similar sites in regions where access to ChatGPT is limited. They found that “activity on Stack Overflow decreased by 25% relative to its Russian and Chinese counterparts, where access to ChatGPT is limited, and to similar forums for mathematics, where ChatGPT is less capable.”

Both of these graphs are linked in the shownotes if you want to see a visual representation of these results.

The significance of this research is that by its very ability to consume the content where programmers are sharing knowledge and solving problems, ChatGPT is destroying the creation of that content. Seems to be a perfect example of the saying “a snake eating its tail.”

Thoughts:

- LLMs could stall in categories like this

- Since newer languages don’t seem to be as well covered by AI, maybe that’s where new knowledge gets added until the languages mature

- In the same vein, let’s say there’s a major update to JavaScript. Would the questions and answers take off again on Stack Overflow because the information doesn’t yet exist?

- If easy-to-answer questions (like mine) no longer need experts to answer them, is that a benefit to how the experts spend their time?

- Just interesting: When they compared against sites in places where access to ChatGPT is limited, like Russia, you can see in their graphs the dip in conversation when Russia invaded Ukraine

- This knowledge is now closed-source

Bart

I linked to this video from Tom Scott on Security Bits when he first released it in early 2023 because it struck me as the right question to ask, even though Tom was very clear that not only did he not know the answer, no one did.

The question Tom argued we need to be asking is where AI is on the sigmoid curve all new technologies seem to follow. A sigmoid starts with very slow growth, then growth accelerates dramatically, sometimes for a long time, sometimes for a short time, and then the growth slows and progress plateaus.
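For anyone who wants to see that shape in numbers, here is a small sketch using the standard logistic function, which is one common sigmoid. Picking this particular curve and these sample points is an assumption for illustration only. Nothing here models actual AI progress.

```javascript
// Standard logistic function, one common example of a sigmoid.
function logistic(t) {
  return 1 / (1 + Math.exp(-t));
}

// How much the curve rises over one unit of time starting at t.
function growthAt(t) {
  return logistic(t + 1) - logistic(t);
}

// Early, middle, and late points on the curve (arbitrary choices).
for (const t of [-6, -0.5, 5]) {
  console.log(`t=${t}: growth ${growthAt(t).toFixed(3)}`);
}
```

The growth figure is tiny at the early point, largest in the middle, and tiny again at the late point. That is the slow start, steep climb, and plateau described above.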

Clearly, the change from ChatGPT3 to ChatGPT4 was a dramatic improvement. But was that just the very start of the uptick in the sigmoid curve, with things set to improve faster and faster for a long time to come? Or was that big step the biggest step we’ll have, the steep part of the sigmoid, with progress about to slow as LLMs approach their natural plateau?

We definitely couldn’t tell where we are on the curve a year ago, and even today it’s not at all clear where we are, but my sense is we’re approaching the switch in direction at the end of the steep part of the curve, and progress is going to slow down, at least on LLMs.

There will most likely be more ChatGPT moments in the future, but we’re going to need to find some other new technique to take us there. I don’t think LLMs have that much more to give. If you’ve followed the growth of AI over the last 5 decades you’ll have seen that story before: cool new idea, big progress, everyone thinks we’re on the brink of truly artificial intelligence, and then, much sooner than the proponents hoped, it plateaus again until the next new idea comes along.
