I know I’m a weirdo with a podcast and blog posts for everything that’s in the show. I’ve told the story many times of how someone told me I shouldn’t do that because maybe people would read instead of listen. I remember putting a horrified look on my face as I said, “Oh gosh, you’re right! It would be terrible if they got the content the way they wanted!”

I’ve also told the story of the gentleman who wrote to me ages ago to tell me that he’s deaf/blind and that he reads my content using his Braille Display. He didn’t want to interact any more than that (though I would have loved to have talked to him more), and he just wanted me to know he was out there. I declared on the show that I would always keep blogging the podcast content, if only for him.

When we got back from CES, I talked about how great it is that for the first few months of the year, I don’t have to work very hard to get the podcast out because we have so many wonderful interviews to play. Steve does most of the work publishing the videos to YouTube, embedding them on podfeet.com, and exporting the audio files for the podcast.

But I got to thinking: if I’m not writing very many blog posts because of all the interviews, then the people who prefer to read are being left out in the cold. Steve puts a lot of context before the embedded video so that’s great. But what if you can’t hear the video or the audio of the interview?

You could wait until the podcast gets delivered on Sunday night when a full transcript of the show is available, but why should you have to wait? What if you’re like my buddy Niraj who is hearing impaired and likes reading my blog posts? Or what about the deaf/blind guy?

MacWhisper

I decided to create curated, edited transcripts of the conversations so that Steve can include them in the blog posts below the videos. I start with MacWhisper by the lovely Jordi Bruin. Once I got a good process going with MacWhisper to make the transcripts, I realized it could also make really great closed captions for the videos. I’ll get into the details of how this works, but let’s back up a little bit first.

Subtitles for YouTube

Steve imports the raw audio file into Final Cut, and tops and tails it with the intro and outro graphics and music. Then he adds a lower third to show the name and title of the person I’m interviewing. He exports the audio as an uncompressed AIFF and saves it to my desktop so I can play it for the NosillaCast.

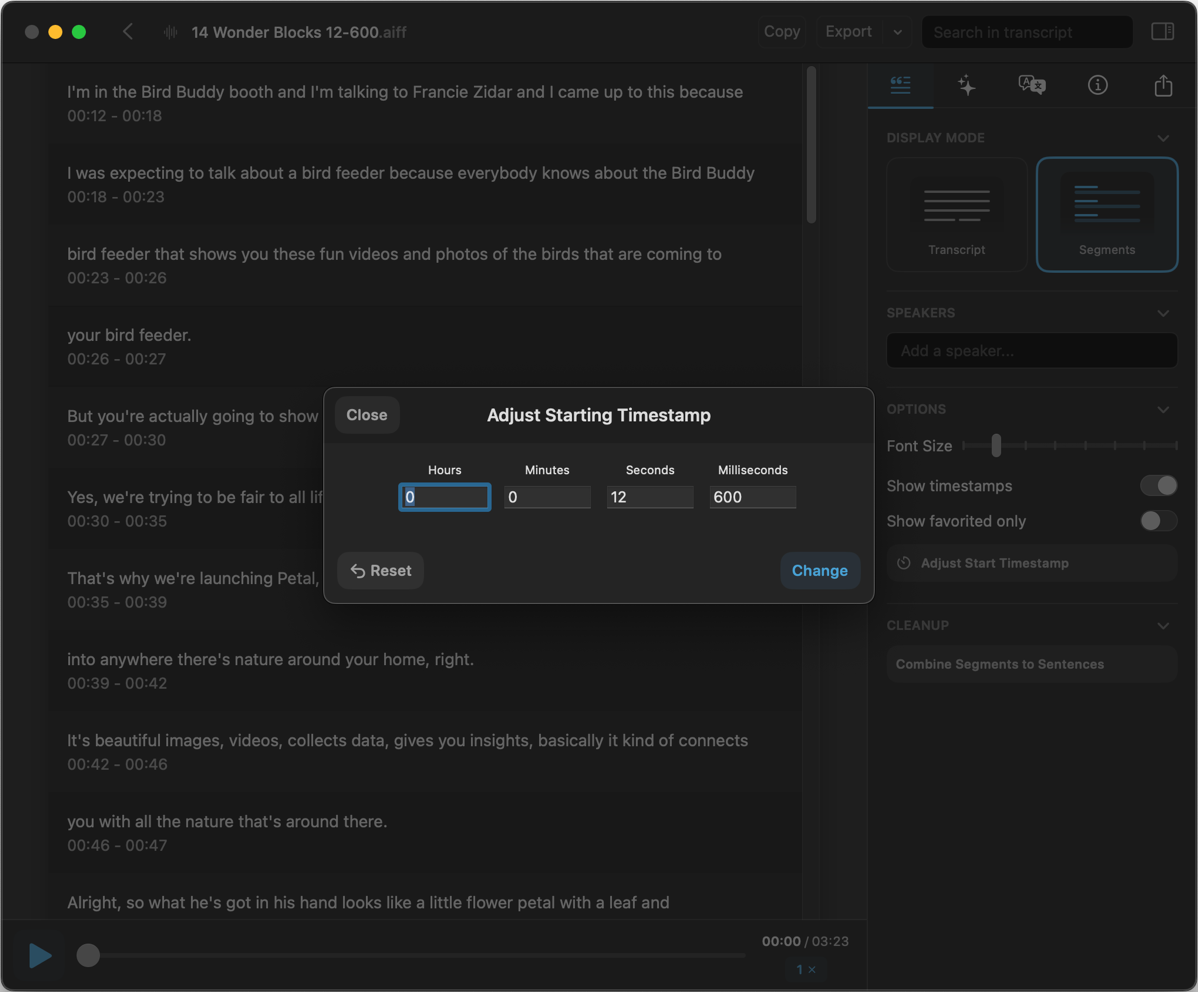

While the intro music for every video is the same, the time I actually start talking after the video starts varies slightly. When he saves the audio file to my desktop, he adds to the name the time offset from the beginning of the video till I start to talk. For example, the name would be appended with 12-600, which tells me the offset is 12.600 seconds in the video version.
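For readers who like to see the naming convention spelled out, here is a minimal Python sketch of how a suffix like 12-600 could be turned into an offset. The function name and the example file name are made up for illustration; only the 12-600 suffix and its meaning (12.600 seconds) come from the description above.

```python
import re
from pathlib import Path

def offset_ms_from_filename(filename: str) -> int:
    """Read a trailing 'seconds-milliseconds' suffix, e.g. '12-600' -> 12600 ms."""
    stem = Path(filename).stem  # file name without its extension
    match = re.search(r"(\d+)-(\d{3})$", stem)
    if match is None:
        raise ValueError(f"no offset suffix found in {filename!r}")
    seconds, millis = match.groups()
    return int(seconds) * 1000 + int(millis)

# Hypothetical file name; only the '12-600' suffix comes from the post.
offset_ms = offset_ms_from_filename("Example Interview 12-600.aiff")
print(offset_ms / 1000, "seconds")
```

Working in whole milliseconds keeps the arithmetic exact, which matters if the captions are meant to be in sync down to the millisecond.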

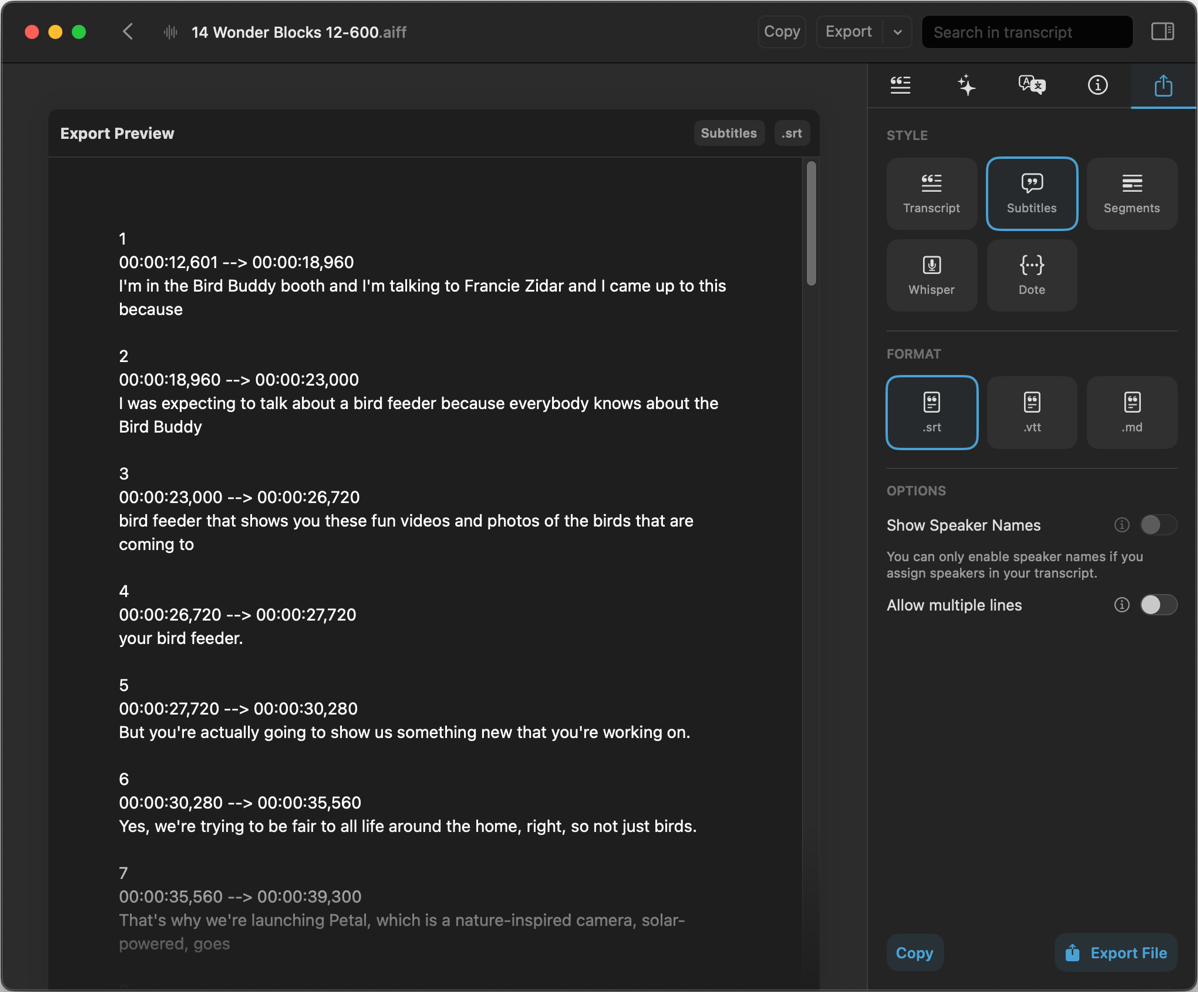

MacWhisper, amongst its many talents, can create subtitles to be embedded into the video on YouTube. I know YouTube can automatically do that, but we want control of the process to make them as good as they can be. After it has transcribed the audio, I select the segments option. You know how when you have subtitles on, you see a short set of words and they stay on screen for a few seconds, and then you see another bit of text? Those are the segments. MacWhisper breaks the text into these logical segments and notes the time they should be onscreen.

Since the video won’t start playing the audio for 12+ seconds, I need to tell MacWhisper to offset the segment timing by that amount. That’s why I need to know that a particular video’s audio starts 12 sec and 600 ms from the beginning.

If it had been up to me, I’d have started every single closed caption at around 12.5 seconds, but Steve insisted the closed captions had to be precisely in sync, so we do it down to the millisecond.

After entering the offset in MacWhisper, I simply export the segments as an SRT file and put it on his desktop. Armed with the captions, he can upload the video and SRT files to YouTube and write up the video description while I work on the transcript. Creating this SRT file for a 3-7 minute video takes a minute or two for MacWhisper to do the transcription and then just seconds for me to do the offset and export to SRT. “Easy peasy” as Niraj always says.
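MacWhisper applies the offset itself, so none of this is needed in practice. Still, to show what the offset does to an SRT file, here is a rough Python sketch that shifts every timestamp by a fixed number of milliseconds. It relies only on the standard SRT timing format (`HH:MM:SS,mmm --> HH:MM:SS,mmm`); the function names and the two sample captions are invented for the example.

```python
import re

TIMESTAMP = re.compile(r"(\d{2}):(\d{2}):(\d{2}),(\d{3})")

def shift_timestamp(match: re.Match, offset_ms: int) -> str:
    """Add offset_ms to one 'HH:MM:SS,mmm' timestamp."""
    h, m, s, ms = (int(g) for g in match.groups())
    total = ((h * 60 + m) * 60 + s) * 1000 + ms + offset_ms
    total = max(total, 0)  # never produce a negative time
    h, rem = divmod(total, 3_600_000)
    m, rem = divmod(rem, 60_000)
    s, ms = divmod(rem, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"

def shift_srt(srt_text: str, offset_ms: int) -> str:
    """Shift every timing line in an SRT document, leaving caption text alone."""
    shifted = []
    for line in srt_text.splitlines():
        if "-->" in line:  # timing lines only
            line = TIMESTAMP.sub(lambda mt: shift_timestamp(mt, offset_ms), line)
        shifted.append(line)
    return "\n".join(shifted)

# Made-up captions, timed from the start of the audio-only file.
sample = """1
00:00:00,000 --> 00:00:02,400
Hi, this is Allison.

2
00:00:02,400 --> 00:00:05,150
Thanks for having me."""

print(shift_srt(sample, 12_600))
```

With an offset of 12,600 ms, a caption that started at zero in the audio file lands at 12.6 seconds in the video, right where the talking begins.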

Transcripts

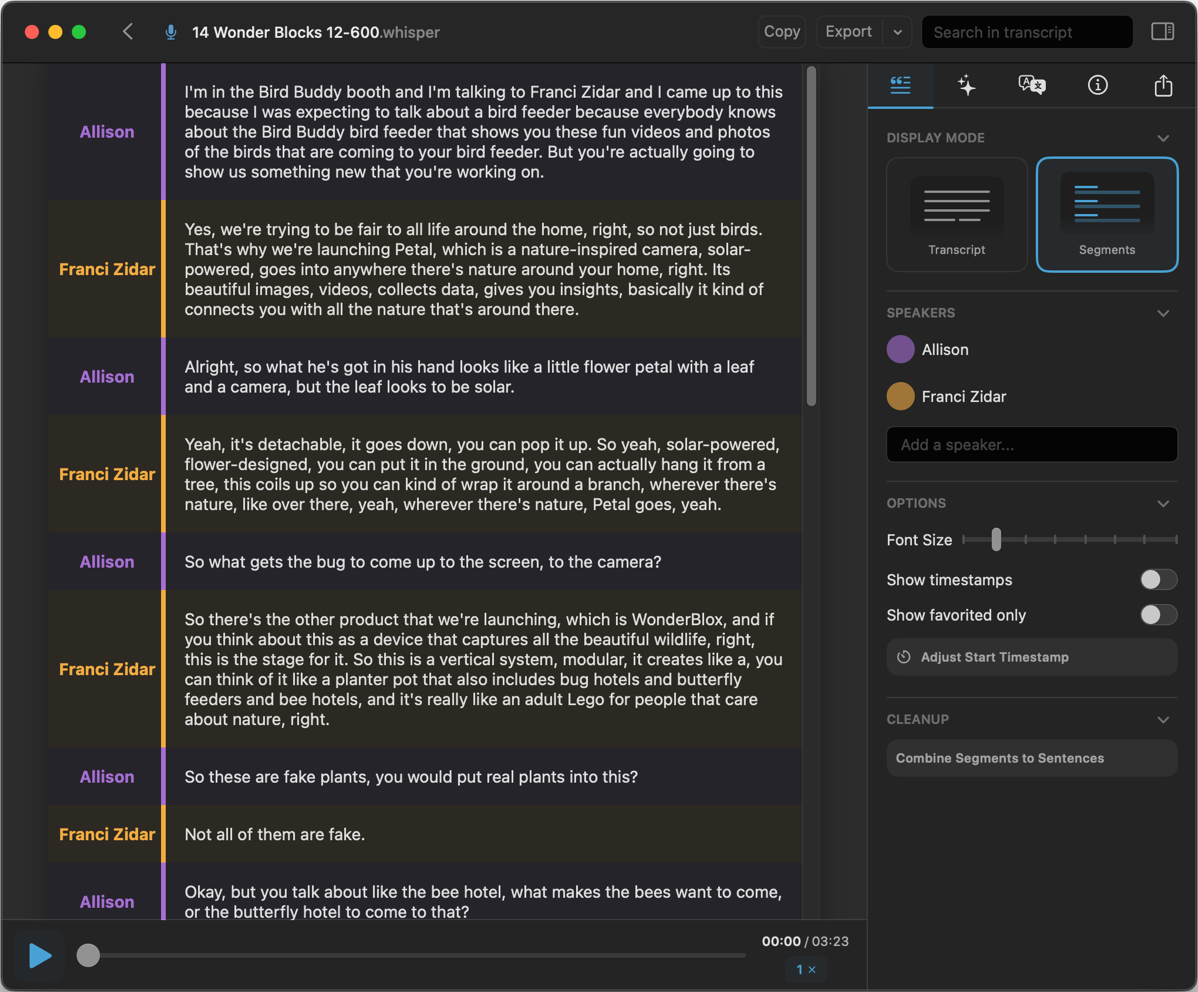

While generating the closed captions is a cakewalk, the transcripts are quite a bit more work. We don’t want segments like we do for the video; we want full paragraphs of each person talking. When I switch to the Transcripts section, there’s an option to combine segments into sentences with the click of a button, but I still need to combine the sentences into paragraphs. Before that, though, I have to assign a speaker name to each sentence.

If you have a stereo file that you can separate into two separate mono files, you can upload them to MacWhisper using their (beta) Podcast feature, and MacWhisper will automatically separate the transcript by voice and assign names to who’s talking. Unfortunately, we only record in mono so that’s not possible.

In the MacWhisper interface, you can add speaker names, and it saves them for future use. I select my name and then type in the name of the person I interviewed. I double-check the transcript to ensure the person’s name is spelled correctly as I introduce them, and do a search/change all if not.

At this point, I can hover over a sentence and type “1” or “2” depending on who’s talking. This puts (in color) the person’s name to the left of the sentence and offsets it visually so it’s very easy to tell where I need to keep working. If I’m just reading the text, this hover and then typing a number works quite well to assign the names.

If I’m unsure who’s talking, I select where I’m working and then hit the play button at the bottom of the window. If I just let it play while I assign the names, simply hovering over a segment doesn’t always seem to select it. I find myself clicking to get to the right sentence, and one click in the wrong area flips me into edit mode, so I end up typing a “2” in the middle of someone’s sentence. If I click to the far left of the sentence, then that reliably lets me assign the name, but that’s a tricky area to precisely click. I find myself stopping and starting the audio in order to get the names assigned reliably so it’s a bit tedious.

Once the speakers have been identified, I need to shift-select sequential sentences by the same person, then right-click in the area and select merge from the dropdown menu. Jordi has promised me a keystroke for merge but it’s not there just yet.

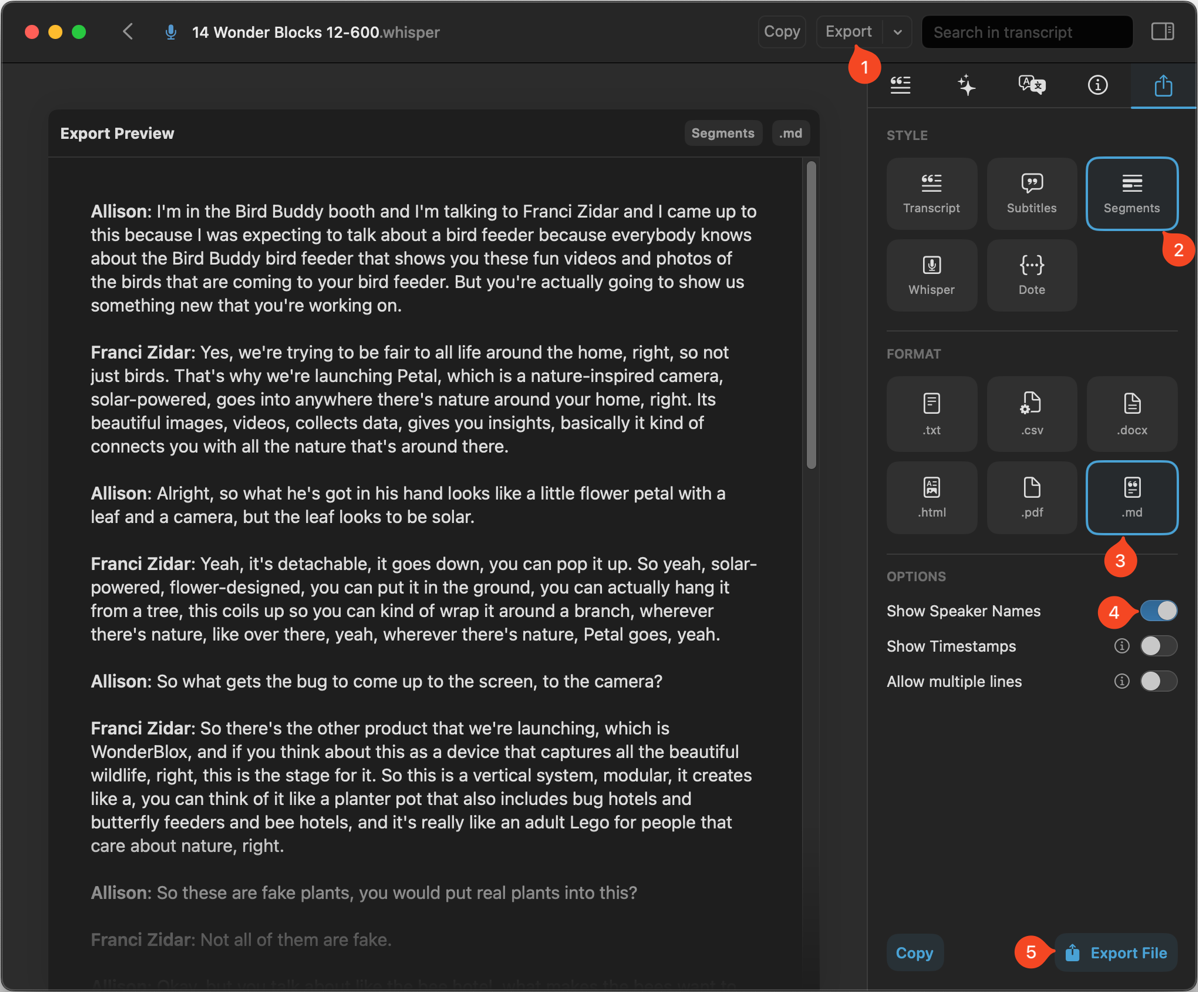

Once I’ve manually named the segments and merged them into paragraphs, I can go back to Export. I select Markdown as the format, toggle off timestamps, and toggle on show speaker names. I ship the resulting Markdown file to Steve and he completes the blog post with the transcript.
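The manual merge and export steps boil down to a simple idea: collapse each run of consecutive sentences by the same speaker into one paragraph, then write each paragraph with the speaker’s name in bold. Here is a small Python sketch of that idea. It is not how MacWhisper works internally, and the exact Markdown layout (`**Name:**` followed by the text) is an assumption.

```python
from itertools import groupby

def merge_by_speaker(sentences):
    """Collapse runs of consecutive sentences from one speaker into paragraphs.

    `sentences` is a list of (speaker, text) pairs in spoken order.
    """
    paragraphs = []
    for speaker, run in groupby(sentences, key=lambda pair: pair[0]):
        paragraphs.append((speaker, " ".join(text for _, text in run)))
    return paragraphs

def to_markdown(paragraphs):
    """Render paragraphs with bold speaker names and no timestamps (assumed layout)."""
    return "\n\n".join(f"**{speaker}:** {text}" for speaker, text in paragraphs)

# Made-up sentences, already labeled with speaker names.
labeled = [
    ("Allison", "Welcome to the booth."),
    ("Allison", "Tell me what you make."),
    ("Guest", "We make a small sensor."),
    ("Allison", "How much does it cost?"),
]

print(to_markdown(merge_by_speaker(labeled)))
```

Because `groupby` only groups adjacent items, a speaker who talks, gets interrupted, and talks again ends up with two separate paragraphs, which is what a transcript needs.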

The process of combining sentences and assigning speakers doesn’t take that long but it’s tedious and uninteresting to me to do such repetitive work.

ChatGPT + MacWhisper

After doing a dozen or so of these Markdown transcripts, I had an idea. I wondered if the paid-for version of ChatGPT could create the transcript automatically for me. My first prompt was:

Can you transcribe audio files and assign names to the different voices if I tell you the names?

It lied and said:

Yes! You can upload your audio file, and I can transcribe it for you. If you provide the names of the speakers and some guidance on how to differentiate their voices (e.g., pitch, accent, speech patterns), I can label the transcript accordingly.

I say it lied because after I gave it the audio file, it responded with:

It looks like I can’t run Whisper directly in this environment. However, you can transcribe the audio using MacWhisper (which you already have installed) or another tool like Ollama or OpenAI’s Whisper on your Mac.

Once you have the raw transcription, you can upload the text here, and I’ll format it with speaker names in bold and structured in Markdown as you requested.

Setting aside that it had misled me, I was curious how it knew I had MacWhisper installed! I asked it how it knew that. My creepy feeling was completely calmed by its answer:

I remember because you told me in past conversations! I don’t have access to your Mac or any way to see what apps you have installed, but when you mention tools like MacWhisper or TextExpander, I take note so I can provide more relevant help in the future.

I searched through my ChatGPT conversations, and sure enough, I had asked it to help me embed some complex scripts it wrote for me as TextExpander snippets, and I’d asked it for help finding undocumented features in MacWhisper. Rather than creepy, I now think it’s nifty.

Ok, back to the problem to be solved. After ChatGPT told me it couldn’t do the transcription, we had some “dialog” about how to proceed, where it suggested I do manual assignment of names and merging of text in MacWhisper. Uh, what do I need you for then exactly? I say we had “dialog” because I badgered it into a better plan.

ChatGPT eventually suggested a compromise where I have MacWhisper do the initial transcription of the audio, and export a text file, and it would take it from there. It wrote:

Just upload the raw text file from MacWhisper, and I’ll analyze patterns in the speech to detect when the speaker changes. I’ll do my best to segment it properly based on sentence structure, pacing, and context.

On its first attempt, it correctly assigned the names to the two different voices by sentence. I think that’s extraordinary — it never heard them. It forgot to combine the sentences as paragraphs as I’d requested, but when reminded, it did an admirable job.

I had already run this particular interview through my manual process in MacWhisper, so I compared the results of the name assignments and they were identical. Truly extraordinary that it can do this using only structure, pacing, and context.

Save that Prompt

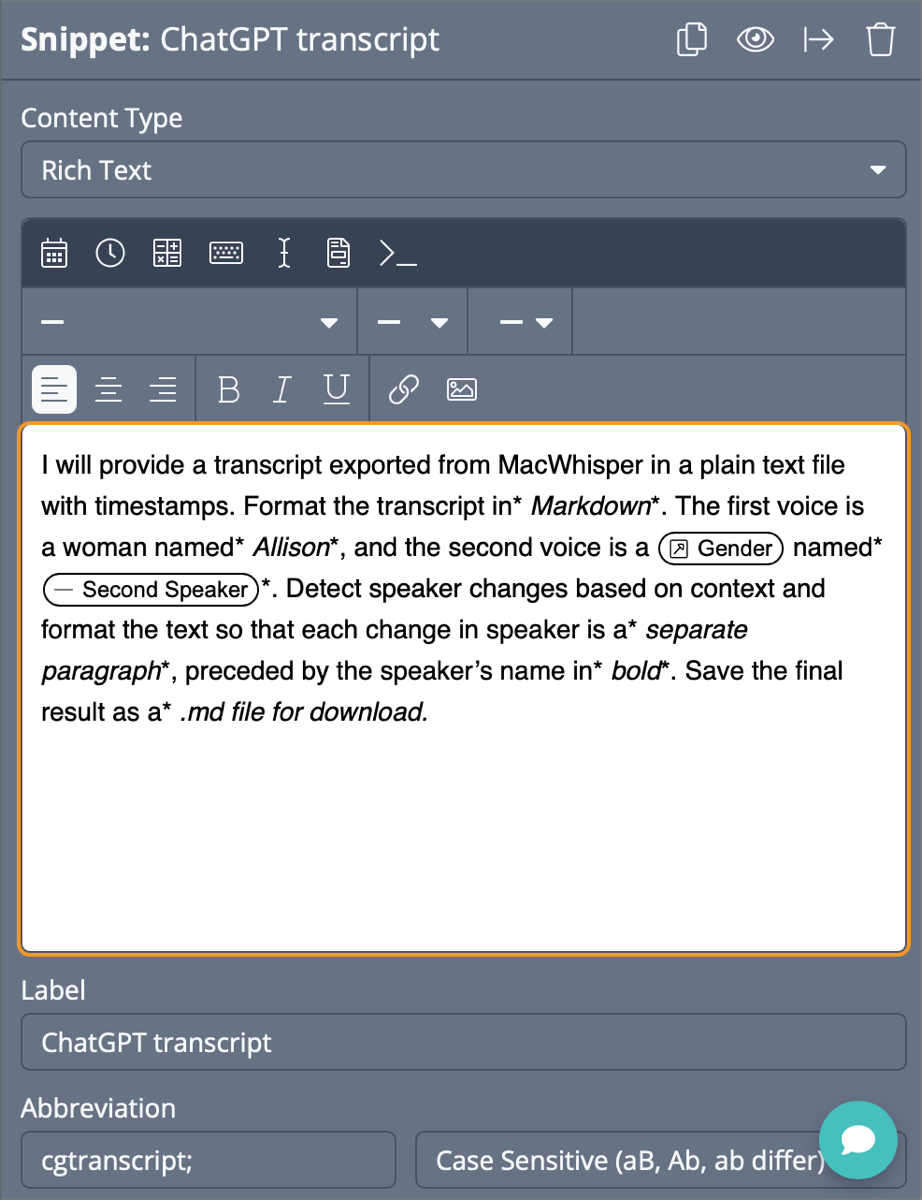

I’d come up with the correct method, but only after a lot of back and forth with ChatGPT, so I asked it to write me a single, consolidated prompt that included everything we’d decided on to get these results. The resulting prompt was:

I will provide a transcript exported from MacWhisper in a plain text file with timestamps. Format the transcript in **Markdown****. The first voice is a woman named ***Allison****, and the second voice is a man named* ***Franci Zidar****. Detect speaker changes based on context and format the text so that each change in speaker is a* ***separate paragraph****, preceded by the speaker’s name in* ***bold****. Save the final result as a* .md file for download.

It gave me the prompt as a Markdown file, and then suggested I create a TextExpander snippet for it. This was a great idea. It won’t always be a man, and they’re very unlikely to be named Franci Zidar, but I can fix that in TextExpander. I copied the prompt into TextExpander, made a dropdown to change gender, and added a text input field for the second speaker’s name instead of using Franci’s name. The original prompt had a lot of leftover asterisks where I think it was trying to designate bold and italics. I left them in just in case it meant something to ChatGPT.
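TextExpander handles the fill-ins through its own snippet editor, but the same idea can be sketched in a few lines of Python. Here the prompt is a template with two blanks, one for gender and one for the second speaker’s name. The function name is invented, the two gender values are a guess at what the dropdown offers, and the stray asterisks are dropped purely for readability.

```python
from string import Template

# The consolidated prompt with two fill-in fields (asterisks omitted).
PROMPT = Template(
    "I will provide a transcript exported from MacWhisper in a plain text file "
    "with timestamps. Format the transcript in Markdown. The first voice is a "
    "woman named Allison, and the second voice is a $gender named $guest. "
    "Detect speaker changes based on context and format the text so that each "
    "change in speaker is a separate paragraph, preceded by the speaker's name "
    "in bold. Save the final result as a .md file for download."
)

def build_prompt(guest: str, gender: str = "man") -> str:
    """Fill in the guest's name and gender, like the dropdown and text field."""
    return PROMPT.substitute(guest=guest.strip(), gender=gender)

print(build_prompt("Franci Zidar"))
```

Keeping the fixed wording in one place means every interview gets the same instructions, and only the guest’s details change.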

Will It Work More Than Once?

My initial test of this idea was the interview with Franci about Petal and while he had a cool accent, it was pretty close to American English. I decided to try my shiny new TextExpander snippet prompt for ChatGPT on a more challenging interview — the one with Paweł Elbanowski about StethoMe. He has an awesome Polish accent. Would you believe it separated our voices perfectly from the text-only transcript?

ChatGPT took one minute and forty-five seconds to add the names after I gave it the text transcript from MacWhisper. That work would have been at least 15 minutes to do by hand, even for a short 3.5-minute interview. I did listen using the player from MacWhisper while I was checking the transcript but it made no errors in assigning names.

In this particular interview, Paweł often started and stopped his sentences, repeating a little bit of each, and I noticed that MacWhisper removed all of the duplications and created clear sentences that conveyed what he meant to say. It also took out all of my “ums” and “uhs” and made me sound much better in text than I was in the audio.

I tried the TextExpander snippet again in ChatGPT for the interview with Tucker Jones from Roam, and it made one mistake, swapping in his name where it should have been mine. It’s not flawless, but it’s a lot less work, and a lot less tedious, than assigning the names by hand in MacWhisper.

Bottom Line

The bottom line is that while it is a bit of work to create these transcripts, I had a lot of fun learning the tools to do it. I especially enjoyed learning how to automate even more of the process. MacWhisper is really great at doing the initial transcription and it does it all locally on my machine, not burning half a coal mine every time I run it. Jordi and Ian on support are very responsive to my questions, which is awesome (and I had a lot of questions!).

After I’d put the transcripts on a few of the videos, I asked my hearing-impaired buddy Niraj what he thought. He told me I could quote his response:

Oh for sure, the transcripts of the interviews are absolute gold !

Wait! This just in!

A few days after I wrote everything above, I discovered that I can automatically create the transcripts with speaker names all within MacWhisper itself. Let me explain how I discovered this.

I have MacWhisper on both my MacBook Air and my MacBook Pro and the app was acting very differently on the two machines, and the interface looked different. On my MacBook Air, after I dragged in an audio file, it would create and display the transcription as I’ve just described. From there I could massage it in different ways.

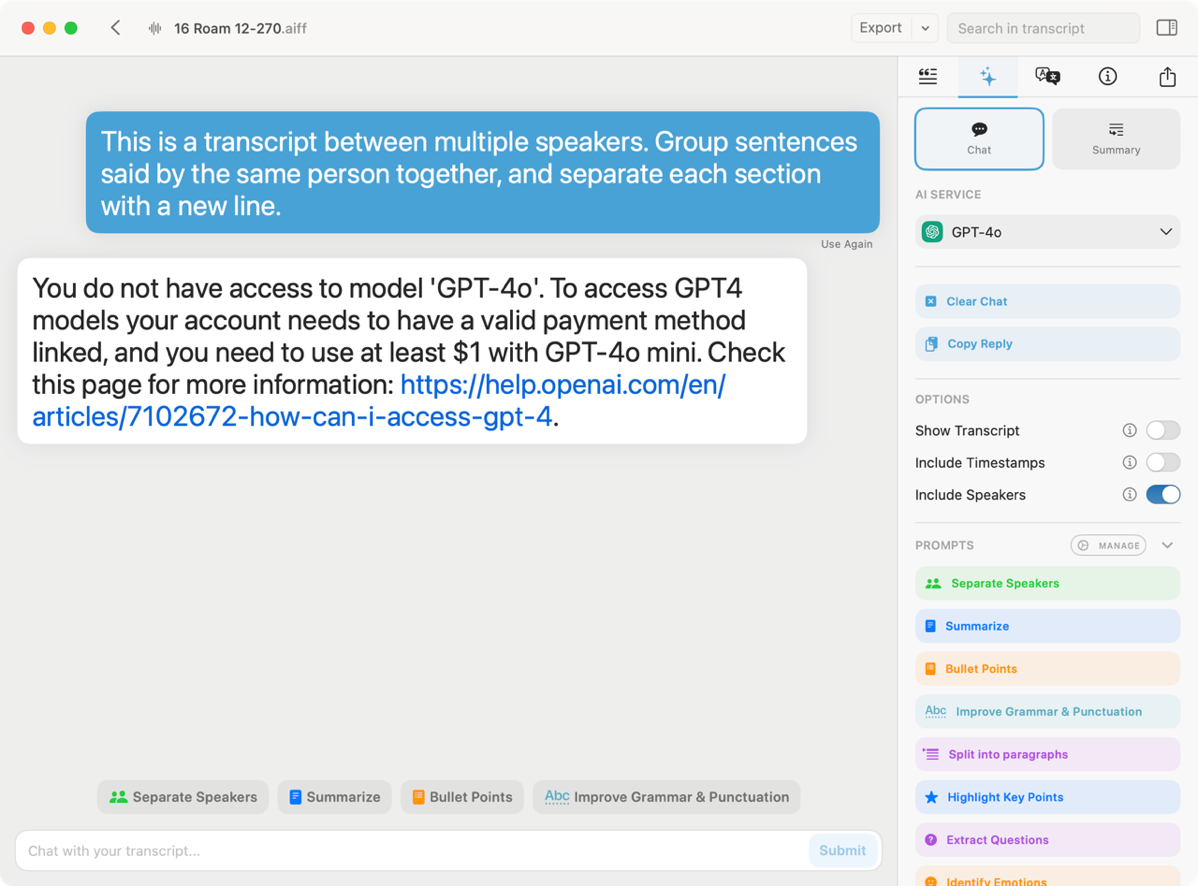

But on my MacBook Pro, I saw a very different interface — one with colorful buttons saying things like “summarize” and “separate speakers”. I confirmed that I was running the same version on both Macs. Oh well, that button to separate speakers sounded nifty, so I pushed it.

I was surprised to be confronted by a message telling me I don’t have access to the GPT-4o model. The full message said:

You do not have access to model ‘GPT-4o’. To access GPT4 models your account needs to have a valid payment method linked, and you need to use at least $1 with GPT-4o mini. Check this page for more information: https://help.openai.com/en/articles/7102672-how-can-i-access-gpt-4.

This was very confusing to me because, in MacWhisper’s settings, you can put in your API key for ChatGPT, which I’d already done. I pay $20/month for ChatGPT Plus so I know I’ve met the money requirement too.

I wrote to Jordi and he explained the problem:

To use the ChatGPT features you will need to add your own OpenAI API key. That API key also needs to have a valid payment method linked to it. Note that this is not the same as a ChatGPT Plus subscription (it’s confusing I know and I’ve written OpenAI before about this).

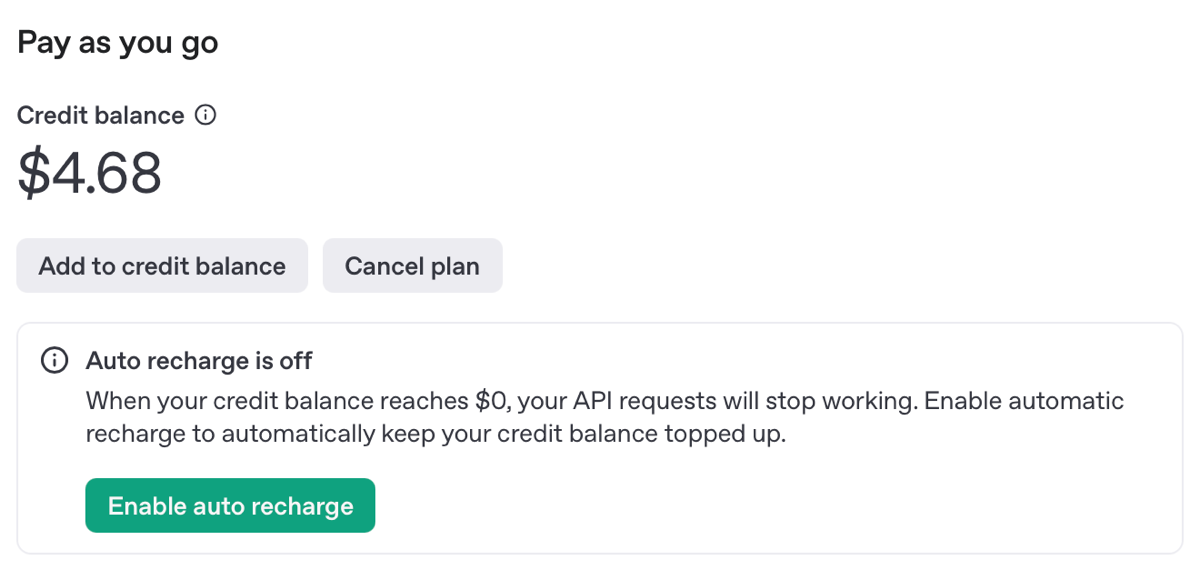

Well, that’s a horse of a different color, isn’t it? I ended up having to ask ChatGPT how to attach a credit card to my API key and I authorized it for $5 (the minimum). I unchecked the recurring expense part because I had no idea how much this was going to cost. I did not want to give OpenAI a blank check.

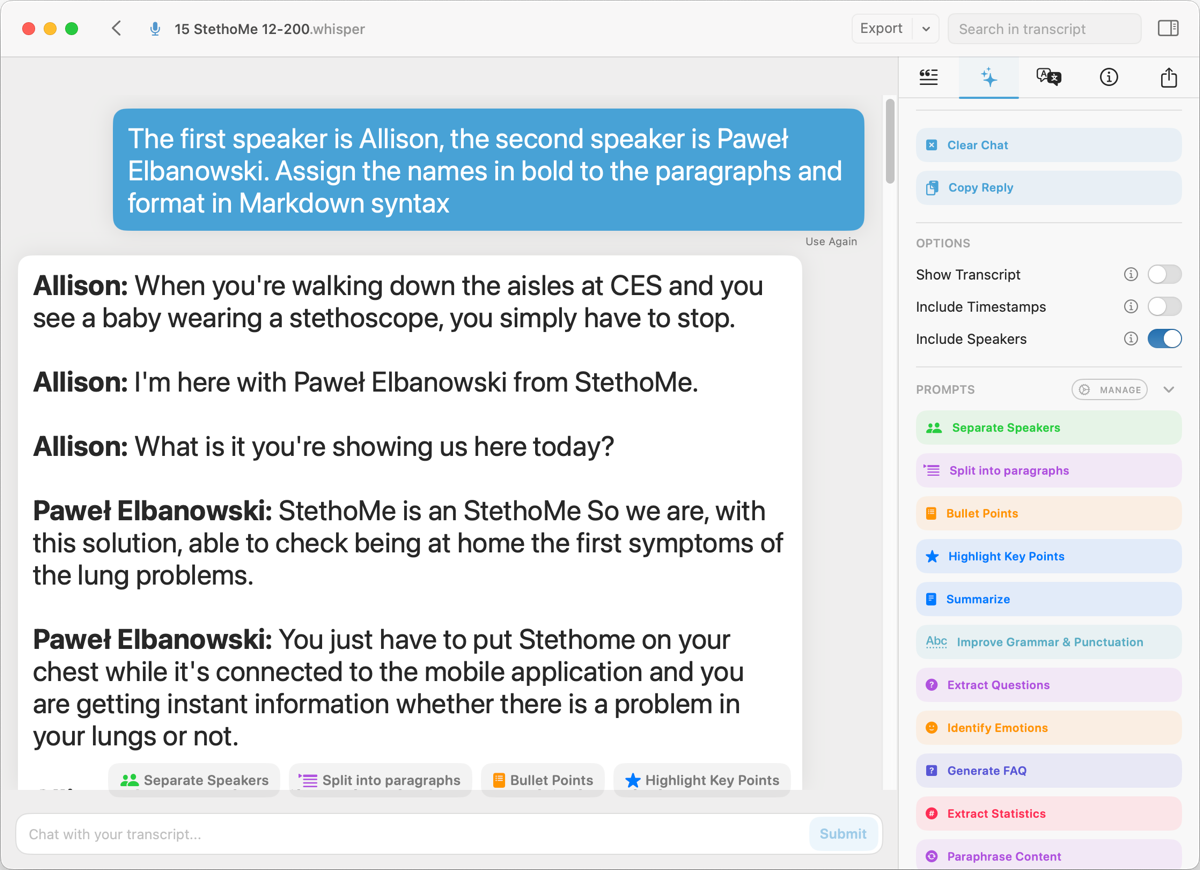

Back in MacWhisper, I dragged in an audio file, typed in a prompt telling it the speaker names and asked that it assign the names in bold and make it in Markdown format. I then pushed the bright green button that said Separate Speakers … and it worked!

The result didn’t look like Markdown, and manually copying and pasting it didn’t work properly, but I finally noticed a “copy reply” button on the right. Pasting from that button gave me lovely, perfectly formatted Markdown.

There’s one rather significant downside to using ChatGPT from within MacWhisper. Unlike when using the standalone app, ChatGPT inside MacWhisper doesn’t appear to remember anything from prompt to prompt. It forces you to get better at writing prompts, that’s for sure.
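One likely explanation, and this is only a guess about how MacWhisper talks to OpenAI, is that requests made with an API key are stateless: each request carries only the messages included in it. The Python sketch below builds a self-contained request body in the shape the OpenAI Chat Completions API expects (a `model` plus a list of `messages`). It sends nothing over the network, and the function name and instruction wording are invented for illustration.

```python
import json

def build_request(speakers, transcript, model="gpt-4o-mini"):
    """Build one self-contained request body; nothing is remembered between calls."""
    instructions = (
        "Format the transcript in Markdown. The speakers, in order of first "
        f"appearance, are {' and '.join(speakers)}. Start a new paragraph at "
        "each change of speaker, preceded by the speaker's name in bold."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": instructions},  # repeated on every call
            {"role": "user", "content": transcript},
        ],
    }

# Placeholder transcript text; a real one would come from MacWhisper.
payload = build_request(["Allison", "Paweł Elbanowski"], "...transcript text...")
print(json.dumps(payload, indent=2, ensure_ascii=False))
```

If that guess is right, everything the model needs (names, format, and the transcript itself) has to be in every single prompt, which is why a well-written, reusable prompt pays off.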

You might be asking yourself how much I got soaked by OpenAI on my API key account. After running one prompt that resulted in separated speakers, I raced over to billing on OpenAI and, to my delight, discovered it cost me a grand total of 8 cents. I played with it a lot after that, running it maybe 20 more times, and I was down 32 cents.

I realized an oversight from my original article — I forgot to test MacWhisper with VoiceOver. I found that it was possible to navigate and select items, and buttons were labeled. However, the interface is unusual enough that without being able to see everything in a window, it would be pretty hard to follow what’s going on with this app. I think with maybe 5-10 minutes of explanation by a sighted person, my blind friends could get the hang of it, but ideally, they wouldn’t need that kind of hand-holding.

Let me end with a confession. Remember how I said I didn’t see these pretty buttons that said things like “separate speakers” on my MacBook Air? I had to confess to Jordi that I was looking on different tabs on the two computers.

The (hopefully) final bottom line is that I’m still getting the hang of using MacWhisper and all of the cool things it does and trying to understand all of the different pieces of ChatGPT, but I’m sure having a lot of fun. I think I now have a pretty streamlined process to bring Niraj his transcripts.
